Searcharoo: Open-Source .NET Search Engine

## This post was originally written by Dragon; I have just simplified it further.

Searcharoo is an open-source C#/ASP.NET search engine and website spider, started and maintained by the Australian developer Craig Dunn. It is fast and highly configurable. Over the years it has grown into a full-featured search engine with many features that have since become standard, including:

- stemming

- stop words

- support for robots.txt and robots meta tags

- indexing of text inside files such as PDF and Word documents

- geotagging of results

- and many more.

The full list of features is on the Searcharoo website. The latest version is 7.0, and that is the version used in this example. Download the latest source code and unzip it to a folder of your choice. You will get the following folders:

- EPocalipse.IFilter (internal component used by Searcharoo; no need to change anything here)

- Mono.GetOptions (internal component used by Searcharoo; no need to change anything here)

- Searcharoo (the base Searcharoo library, which does most of the work)

- Searcharoo.Indexer (a command-line application that runs the spider to index a site)

- WebAppCatalogResource (a project with a single purpose: building a resource DLL that contains the two index XML files, so that search works in a medium-trust environment, as on most hosted websites)

- WebApplication (an example website that uses Searcharoo; use it to see how to integrate search into your own site)

## You also need to download itextsharp.dll for reading and indexing PDF files. I downloaded version 4.1.2, which does not need any code changes. If you are comfortable fixing the code, you can download the latest version instead. Add a reference to itextsharp.dll in the Searcharoo project. With the latest version I was getting errors like "The best overloaded method match for 'iTextSharp.text.pdf.PRTokeniser.PRTokeniser(iTextSharp.text.pdf.RandomAccessFileOrArray)' has some invalid arguments".

## When you open the solution in Visual Studio, it may upgrade the projects for you and then show all of them. Build each project one at a time from Solution Explorer: right-click each project and click Build. You should not get any errors.

Building an index (running a spider)

A search engine needs index files to perform a search. An easy way to create them is to open the Searcharoo.Indexer project in your development environment and edit its app.config file. This is where all configuration options are stored as key/value pairs, and it should contain the same settings as the Searcharoo part of the web.config file in a web project. Change the options as needed, build the project, and run it from the command line. When editing app.config and web.config, pay attention to the following settings:

Searcharoo_DebugSerializeXml - controls whether the indexer writes binary or XML files. I always set it to 'true' so that I can inspect the generated XML files.

Searcharoo_VirtualRoot - the URL where indexing starts. It can contain several URLs separated by ';', for example if you want to index several subdomains.

Searcharoo_StemmingType, Searcharoo_StoppingType and Searcharoo_GoType work only for English words. If your website is not in English, set each of these to '0'.

Searcharoo_SummaryChars - the number of characters displayed for each item in the search results.

Searcharoo_CatalogFilepath - the folder on the local machine where the catalog file is stored, for example 'E:\webapp\Searcharoo7\WebAppCatalogResource\'.

Searcharoo_CatalogFilename - the catalog file name; the default is 'z_searcharoo.xml'.

Searcharoo_TempFilepath - the temp folder. It can be anywhere, but it is usually Searcharoo_CatalogFilepath with 'Temp' appended, for example 'E:\webapp\Searcharoo7\WebAppCatalogResource\Temp\'.

## Don't forget to create the Temp folder manually, otherwise the spider shows an error when downloading files. This is where files are downloaded temporarily, indexed and then deleted.

Searcharoo_DefaultResultsPerPage - the number of search results shown per page. The default is '10'.

Searcharoo_DefaultLanguage - the ISO code of your site's language: 'en' for English sites, or whatever applies to your site. If you leave 'en' here and your site has non-Latin-1 characters (such as Cyrillic or Eastern European letters), those characters will be stripped out of the results.

Searcharoo_AssumeAllWordsAreEnglish - set this to 'true' for English websites and 'false' for other languages.

Searcharoo_InMediumTrust - set this to 'true' if your site runs in a medium-trust environment (the large majority of cases).

Searcharoo_DefaultDocument - tells the spider which page is the default (starting) page, so that it does not index that page several times (for example as both '/' and '/default.aspx'). If your website has a default page (such as 'index.html' or 'default.aspx'), enter it here; if you are unsure, leave it blank.
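Because these settings are plain key/value pairs, they are easy to sanity-check before a long spider run. Below is a minimal C# sketch, assuming the keys live in the standard `<appSettings>` section as `<add key="..." value="..."/>` entries. `SearcharooPreflight` and its methods are hypothetical helpers, not part of Searcharoo, and the rules they check only restate the advice above (start URLs present, Temp folder created, English-only options switched off for other languages).

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Xml.Linq;

// Hypothetical pre-flight check (not part of Searcharoo). It reads the
// Searcharoo_* keys from an app.config/web.config <appSettings> section and
// flags the pitfalls described in this post before the spider is run.
public static class SearcharooPreflight
{
    static readonly string[] EnglishOnlyKeys =
    {
        "Searcharoo_StemmingType", "Searcharoo_StoppingType", "Searcharoo_GoType"
    };

    // Collects <add key="Searcharoo_..." value="..."/> entries as key/value pairs.
    public static Dictionary<string, string> LoadSettings(string configXml)
    {
        var settings = new Dictionary<string, string>(StringComparer.OrdinalIgnoreCase);
        XDocument doc = XDocument.Parse(configXml);
        foreach (XElement add in doc.Descendants("appSettings").Elements("add"))
        {
            string key = (string)add.Attribute("key");
            if (key != null && key.StartsWith("Searcharoo_", StringComparison.OrdinalIgnoreCase))
                settings[key] = (string)add.Attribute("value") ?? "";
        }
        return settings;
    }

    // Splits the ';'-separated Searcharoo_VirtualRoot value into start URLs.
    public static List<string> StartUrls(IDictionary<string, string> settings)
    {
        var urls = new List<string>();
        string root;
        if (settings.TryGetValue("Searcharoo_VirtualRoot", out root) && root != null)
        {
            foreach (string part in root.Split(';'))
            {
                string url = part.Trim();
                if (url.Length > 0)
                    urls.Add(url);
            }
        }
        return urls;
    }

    // Returns human-readable warnings; an empty list means nothing was flagged.
    public static List<string> FindProblems(IDictionary<string, string> settings)
    {
        var problems = new List<string>();
        string value;

        if (StartUrls(settings).Count == 0)
            problems.Add("Searcharoo_VirtualRoot is empty: the spider has nowhere to start");

        // A missing Temp folder makes the spider fail when it downloads files.
        if (settings.TryGetValue("Searcharoo_TempFilepath", out value)
            && !string.IsNullOrEmpty(value) && !Directory.Exists(value))
            problems.Add("Temp folder does not exist: " + value);

        // This check treats a missing or empty language as "en".
        string language;
        if (!settings.TryGetValue("Searcharoo_DefaultLanguage", out language)
            || string.IsNullOrEmpty(language))
            language = "en";

        if (!language.Equals("en", StringComparison.OrdinalIgnoreCase))
        {
            // Stemming, stop words and go words only work for English.
            foreach (string key in EnglishOnlyKeys)
                if (settings.TryGetValue(key, out value) && value != "0")
                    problems.Add(key + " should be '0' for a non-English site");

            if (settings.TryGetValue("Searcharoo_AssumeAllWordsAreEnglish", out value)
                && string.Equals(value, "true", StringComparison.OrdinalIgnoreCase))
                problems.Add("Searcharoo_AssumeAllWordsAreEnglish should be 'false' for a non-English site");
        }
        return problems;
    }
}
```

For example, you could pass the contents of the built `Searcharoo.Indexer.exe.config` (the copy of app.config placed next to the executable) to `LoadSettings` and print whatever `FindProblems` returns before starting the indexer.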

Once these options are set, run the spider for the first time. If all goes well, you end up with 'z_searcharoo.xml' in the location specified by the 'Searcharoo_CatalogFilepath' option. That was pretty easy, right? Not quite.

If you open the XML file, you will see that it is structured the same way as the one described on the Searcharoo 7 website. However, one thing is missing: a second file, 'z_searcharoo-cache.xml'. The Searcharoo 7 article describes the structure of this file and explains that it is required to show search results with the searched words highlighted, as Google does, but the file is never created. I have seen many people ask how to create it without getting an answer, and creating this file is the main reason I wrote this post; everything else is explained elsewhere (use Google to find it; Google is your best friend when you do development). You will not be able to compile the test solution you downloaded without this file. So you now have two options: fall back to version 6, which does not need the second file and shows results without keyword highlighting, or follow the rest of this post to see how I fixed this and got the highlighting to work as expected.
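Since the missing cache file is easy to overlook, a quick check after each indexing run shows whether both files were actually produced. This is a minimal C# sketch, assuming XML output (Searcharoo_DebugSerializeXml set to 'true') and assuming the cache file name is the catalog name with '-cache' inserted before the extension. That matches the default 'z_searcharoo.xml' / 'z_searcharoo-cache.xml' pair but is not taken from Searcharoo's code. `IndexFileCheck` is a hypothetical helper.

```csharp
using System.Collections.Generic;
using System.IO;

// Hypothetical post-indexing check (not part of Searcharoo).
public static class IndexFileCheck
{
    // Assumed naming rule: "z_searcharoo.xml" -> "z_searcharoo-cache.xml".
    public static string CacheFileNameFor(string catalogFileName)
    {
        return Path.GetFileNameWithoutExtension(catalogFileName) + "-cache"
             + Path.GetExtension(catalogFileName);
    }

    // Returns the full paths of the catalog and cache files that do not exist.
    public static List<string> MissingFiles(string catalogFilepath, string catalogFileName)
    {
        var missing = new List<string>();
        foreach (string name in new[] { catalogFileName, CacheFileNameFor(catalogFileName) })
        {
            string fullPath = Path.Combine(catalogFilepath, name);
            if (!File.Exists(fullPath))
                missing.Add(fullPath);
        }
        return missing;
    }
}
```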

Creating the cache index file

Only a few steps are needed to create the cache file:

1. Open Spider.cs in the 'Indexer' folder of the Searcharoo project and uncomment all lines related to the cache. These are:

line #47, which initializes an instance of the Cache class;

line #135, at the beginning of the 'BuildCatalog' method: move the code `_Cache = new Cache(); // [v7]` onto a new line and uncomment it;

line #162, at the end of the 'BuildCatalog' method, which reads

`//_Cache.Save(); //[v7]`

and should be changed to

`_Catalog.FileCache.Save(); //[v7]`

2. In Catalog.cs, in the 'Common' folder of the Searcharoo project, make the following change:

comment out line #354 so that it actually reads something from this file.

That's all. Now rebuild the Searcharoo project and you are ready to go.

Building an index (running a spider) - continued

- Run Searcharoo.Indexer.EXE with the correct configuration to remotely index your website.

- Copy the resulting z_searcharoo.xml catalog file (or whatever you have called it in the .config) to the special WebAppCatalogResource project.

- Ensure the XML file's Build Action is set to Embedded Resource.

- Ensure that it is the ONLY resource in that project.

- Compile the solution; WebAppCatalogResource.DLL will be copied into the WebApplication\bin\ directory.

- Deploy WebAppCatalogResource.DLL to your server.
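The Embedded Resource build action is what puts the XML inside the DLL, which is how the catalog reaches a medium-trust site. The generic .NET sketch below shows how an embedded XML file can be located and loaded from an assembly. `EmbeddedCatalog` is a hypothetical helper, not Searcharoo's own loader, and it assumes the usual naming of manifest resources as `<DefaultNamespace>.<file name>` for files in the project root.

```csharp
using System;
using System.IO;
using System.Linq;
using System.Reflection;
using System.Xml.Linq;

// Generic .NET sketch (not Searcharoo's loader) for reading an XML file that
// was compiled into an assembly with Build Action = Embedded Resource.
public static class EmbeddedCatalog
{
    // Manifest resource names are usually "<DefaultNamespace>.<file name>",
    // e.g. "WebAppCatalogResource.z_searcharoo.xml" (namespace assumed).
    public static string FindResourceName(string[] resourceNames, string fileName)
    {
        return resourceNames.FirstOrDefault(name =>
            name.Equals(fileName, StringComparison.OrdinalIgnoreCase) ||
            name.EndsWith("." + fileName, StringComparison.OrdinalIgnoreCase));
    }

    // Returns null when no matching resource exists, e.g. because the file's
    // Build Action was left at "Content" or "None".
    public static XDocument Load(Assembly assembly, string fileName)
    {
        string name = FindResourceName(assembly.GetManifestResourceNames(), fileName);
        if (name == null)
            return null;

        using (Stream stream = assembly.GetManifestResourceStream(name))
        {
            return XDocument.Load(stream);
        }
    }
}
```

For instance, `EmbeddedCatalog.Load(Assembly.Load("WebAppCatalogResource"), "z_searcharoo.xml")`, assuming the DLL's assembly name is WebAppCatalogResource, returns null if the file was not embedded, which makes a wrong build action easy to spot.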

Run Searcharoo.Indexer from the command line. It spiders the site specified in its app.config and saves the index to the location specified in the same file. While it runs, it logs which pages it is inspecting, how many words go into the index, and so on, and it prints a message when it has finished.

Copy the two resulting XML files to the root of the 'WebAppCatalogResource' project and build it to get a DLL that you reference in your site. Alternatively, put the XML files directly in the location specified in web.config.

See the 'SearchPageBase.cs' file in the 'App_Code' folder of the 'WebApplication' project for an example of how to add search to your site.

That's all. A useful next step is to put the compiled 'Searcharoo.Indexer.exe' somewhere on your server and create a scheduled job that runs it once a week or once a day (depending on how often your content changes). That way your users always have a fresh index for quickly finding information on your site. For smaller sites, indexing usually takes only a few minutes and is not resource-intensive.
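For the scheduled job, a small wrapper can skip re-indexing while the catalog is still fresh. This is a minimal C# sketch: `ScheduledReindex` is hypothetical, the paths and intervals are placeholders you would choose, and the post does not say what exit codes Searcharoo.Indexer.exe returns, so the code only passes the exit code through.

```csharp
using System;
using System.Diagnostics;
using System.IO;

// Hypothetical wrapper for a scheduled re-index job (not part of Searcharoo).
public static class ScheduledReindex
{
    // True when the catalog file is missing or older than maxAge.
    public static bool IsStale(string catalogPath, TimeSpan maxAge, DateTime utcNow)
    {
        if (!File.Exists(catalogPath))
            return true;
        return utcNow - File.GetLastWriteTimeUtc(catalogPath) > maxAge;
    }

    // Starts the indexer, waits for it to finish and passes its exit code through.
    public static int RunIndexer(string indexerExePath)
    {
        string fullPath = Path.GetFullPath(indexerExePath);
        var startInfo = new ProcessStartInfo(fullPath)
        {
            UseShellExecute = false,
            // Run from the exe's folder so relative paths resolve next to it.
            WorkingDirectory = Path.GetDirectoryName(fullPath)
        };
        using (Process process = Process.Start(startInfo))
        {
            process.WaitForExit();
            return process.ExitCode;
        }
    }
}
```

A scheduled task would then call `IsStale` with the catalog path and, for example, `TimeSpan.FromDays(7)` for a weekly refresh, and call `RunIndexer` only when it returns true.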

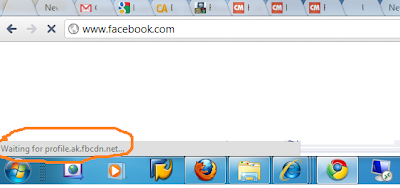

### My indexing was successful, but I get an error when running the WebApplication's search.aspx page. The console indexer ran as expected.

However, running SearchSpider.aspx created the correct files after I granted full security permissions to web.config and gave Everyone access to the .xml file in the catalog folder. After that I was able to search easily.

## One more open problem is the large XML index file, which I still have to resolve.

- Vinod Kotiya
